[Container Application Tutorial Series] Kubernetes Cluster Deployment (CentOS 7)

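Related tutorials:

High-availability cluster deployment based on Kubernetes 1.20.7: https://www.wsjj.top/archives/kubernetes

Cluster deployment based on Kubernetes 1.25.11 with Docker as the container engine (CentOS 7): https://www.wsjj.top/archives/163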

I. Environment Planning

1. Host plan

| Hostname | IP address | Spec | Role |
|---|---|---|---|
| k8s-master.linux.com | 192.168.140.10 | at least 2 CPU cores, 2 GB RAM | Master node |
| k8s-node01.linux.com | 192.168.140.11 | at least 2 CPU cores, 2 GB RAM | Worker node |
| k8s-node02.linux.com | 192.168.140.12 | at least 2 CPU cores, 2 GB RAM | Worker node |

2. Software plan

Kubernetes 1.20.7

Docker 19.03

3. Network plan

Pod network: 192.168.0.0/16

Service network: 172.16.0.0/16

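II. Preparation (Important)

1. Disable the firewall and SELinux on all three servers, and configure time synchronization

The firewall and SELinux steps are omitted here.

Install ntpdate on every host: yum install -y ntpdate

2. Configure a scheduled task (cron job) that syncs the clock every 30 minutes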

[root@k8s-master ~]# crontab -e

*/30 * * * * /usr/sbin/ntpdate 120.25.115.20 &> /dev/null

[root@k8s-node01 ~]# crontab -e

*/30 * * * * /usr/sbin/ntpdate 120.25.115.20 &> /dev/null

[root@k8s-node02 ~]# crontab -e

*/30 * * * * /usr/sbin/ntpdate 120.25.115.20 &> /dev/null
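
Editing the crontab by hand on three hosts is easy to get wrong. As a rough sketch, the helper below (the name `ensure_cron_job` is made up for this example) reads the current crontab from standard input and prints it with the job appended only when an identical line is not already present, so running it twice does not create duplicates.

```shell
# Hypothetical helper: read a crontab from stdin and print it with the job
# given as $1 appended only if an identical line is not already there.
ensure_cron_job() {
    job="$1"
    current="$(cat)"
    if printf '%s\n' "$current" | grep -qxF -- "$job"; then
        # Job already present: print the crontab unchanged.
        printf '%s\n' "$current"
    else
        # Keep existing entries (if any), then add the job.
        if [ -n "$current" ]; then printf '%s\n' "$current"; fi
        printf '%s\n' "$job"
    fi
}

# Example use on one host, with the same schedule and NTP server as above:
# crontab -l 2>/dev/null \
#   | ensure_cron_job '*/30 * * * * /usr/sbin/ntpdate 120.25.115.20 &> /dev/null' \
#   | crontab -
```

Because the function only transforms text, it can be tried on a saved copy of the crontab before it is piped into `crontab -`.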

3. Disable SSH DNS lookups on all hosts (speeds up SSH connections)

[root@k8s-master ~]# sed -ri 's|#UseDNS yes|UseDNS no|' /etc/ssh/sshd_config

[root@k8s-master ~]# systemctl restart sshd

[root@k8s-node01 ~]# sed -ri 's|#UseDNS yes|UseDNS no|' /etc/ssh/sshd_config

[root@k8s-node01 ~]# systemctl restart sshd

[root@k8s-node02 ~]# sed -ri 's|#UseDNS yes|UseDNS no|' /etc/ssh/sshd_config

[root@k8s-node02 ~]# systemctl restart sshd
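
The sed command above only matches the stock line `#UseDNS yes`. If the file has already been edited, it silently changes nothing. A slightly more defensive sketch follows; the function name `disable_sshd_dns` is an assumption for illustration, and appending at the end of the file assumes the configuration has no trailing `Match` block.

```shell
# Hypothetical helper: force "UseDNS no" in the sshd_config file given as $1.
disable_sshd_dns() {
    conf="$1"
    if grep -Eq '^[[:space:]]*#?[[:space:]]*UseDNS[[:space:]]' "$conf"; then
        # Rewrite an existing UseDNS line, commented out or not.
        sed -i -E 's|^[[:space:]]*#?[[:space:]]*UseDNS[[:space:]].*$|UseDNS no|' "$conf"
    else
        # No UseDNS line at all: append one.
        printf 'UseDNS no\n' >> "$conf"
    fi
}

# Example use on one host:
# disable_sshd_dns /etc/ssh/sshd_config && systemctl restart sshd
```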

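4. Configure passwordless SSH on all hosts (important)

The commands below copy the master's whole .ssh directory, including the private key, to the other hosts, so all three hosts share one key pair. This is convenient in a lab but not advisable in production.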

[root@k8s-master ~]# ssh-keygen -t rsa

[root@k8s-master ~]# mv /root/.ssh/id_rsa.pub /root/.ssh/authorized_keys

[root@k8s-master ~]# scp -r /root/.ssh/ root@192.168.140.11:/root/

[root@k8s-master ~]# scp -r /root/.ssh/ root@192.168.140.12:/root/

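Test passwordless SSH and check that the clocks are in sync (the quotes make both commands run on the remote host)

[root@k8s-master ~]# for i in 10 11 12

> do

> ssh root@192.168.140.$i "hostname; date"

> done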

k8s-master.linux.com

2023年 06月 09日 星期五 11:45:15 CST

k8s-node01.linux.com

2023年 06月 09日 星期五 11:45:15 CST

k8s-node02.linux.com

2023年 06月 09日 星期五 11:45:16 CST

5. Add hostname resolution entries on all hosts

[root@k8s-master ~]# vim /etc/hosts

# Add the following lines

192.168.140.10 k8s-master.linux.com k8s-master

192.168.140.11 k8s-node01.linux.com k8s-node01

192.168.140.12 k8s-node02.linux.com k8s-node02

[root@k8s-master ~]# scp -r /etc/hosts root@192.168.140.11:/etc/

hosts 100% 299 130.0KB/s 00:00

[root@k8s-master ~]# scp -r /etc/hosts root@192.168.140.12:/etc/

hosts 100% 299 200.6KB/s 00:00

6. Disable swap on all hosts

[root@k8s-master ~]# for i in 10 11 12

> do

> ssh root@192.168.140.$i swapoff -a

> ssh root@192.168.140.$i free -m

> ssh root@192.168.140.$i sysctl -w vm.swappiness=0

> done

Comment out the swap entry in /etc/fstab on every host so that swap stays off after a reboot

[root@k8s-master ~]# vim /etc/fstab

# /dev/mapper/centos-swap swap swap defaults 0 0

[root@k8s-node01 ~]# vim /etc/fstab

# /dev/mapper/centos-swap swap swap defaults 0 0

[root@k8s-node02 ~]# vim /etc/fstab

# /dev/mapper/centos-swap swap swap defaults 0 0
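
Instead of opening /etc/fstab in vim on each host, the same change can be scripted. This is a minimal sketch: `comment_swap_entries` is a hypothetical name, and the pattern assumes the usual whitespace-separated fstab layout with `swap` as its own field.

```shell
# Hypothetical helper: comment out every active swap entry in the fstab
# file given as $1. Lines that are already comments are left untouched.
comment_swap_entries() {
    sed -i -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/# /' "$1"
}

# Example use from the master, once passwordless SSH works:
# for i in 10 11 12; do
#     ssh root@192.168.140.$i "$(declare -f comment_swap_entries); comment_swap_entries /etc/fstab"
# done
```

Running it on a copy of the file first is a cheap way to confirm that only the swap line changes.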

7. Adjust resource limits on all hosts

[root@k8s-master ~]# for i in 10 11 12

> do

> ssh root@192.168.140.$i ulimit -SHn 65535

> done

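nofile is the maximum number of open files, nproc is the maximum number of processes, and memlock is the maximum amount of memory a process may lock. The ulimit command above only affects the SSH session that runs it; the limits.conf entries below make the limits persistent across logins.

[root@k8s-master ~]# vim /etc/security/limits.conf

# Append the following at the end of the file (a soft limit must not exceed its hard limit)

* soft nofile 655360

* hard nofile 655360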

* soft nproc 655350

* hard nproc 655350

* soft memlock unlimited

* hard memlock unlimited

[root@k8s-node01 ~]# vim /etc/security/limits.conf

# Append the following at the end of the file (a soft limit must not exceed its hard limit)

* soft nofile 655360

* hard nofile 655360

* soft nproc 655350

* hard nproc 655350

* soft memlock unlimited

* hard memlock unlimited

[root@k8s-node02 ~]# vim /etc/security/limits.conf

# Append the following at the end of the file (a soft limit must not exceed its hard limit)

* soft nofile 655360

* hard nofile 655360

* soft nproc 655350

* hard nproc 655350

* soft memlock unlimited

* hard memlock unlimited
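
A soft limit that is larger than the matching hard limit is not valid, and the mistake is easy to miss in a hand-edited file. The following sketch scans a limits.conf-style file and reports such pairs. `check_limits` is a hypothetical name, and the parser only understands the simple four-column lines used in this tutorial.

```shell
# Hypothetical helper: report soft limits that exceed the hard limit for the
# same domain and item. Exit status is 0 when the file is consistent.
check_limits() {
    awk '
        /^[ \t]*#/ || NF < 4 { next }            # skip comments and short lines
        $2 == "soft" { soft[$1 " " $3] = $4 }
        $2 == "hard" { hard[$1 " " $3] = $4 }
        END {
            bad = 0
            for (k in soft) {
                if (!(k in hard)) continue
                s = soft[k]; h = hard[k]
                if (h == "unlimited") continue    # any soft value is fine
                if (s == "unlimited" || s + 0 > h + 0) {
                    printf "soft > hard for %s: %s > %s\n", k, s, h
                    bad = 1
                }
            }
            exit bad
        }
    ' "$1"
}

# Example use:
# check_limits /etc/security/limits.conf && echo "limits look consistent"
```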

8. Configure the package repositories

[root@k8s-master ~]# wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

[root@k8s-master ~]# wget -O /etc/yum.repos.d/epel.repo https://mirrors.aliyun.com/repo/epel-7.repo

[root@k8s-master ~]# vim /etc/yum.repos.d/docker.repo

[docker-ce-stable]

name=Docker CE Stable - $basearch

baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/$releasever/$basearch/stable

enabled=1

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-stable-debuginfo]

name=Docker CE Stable - Debuginfo $basearch

baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/$releasever/debug-$basearch/stable

enabled=0

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-stable-source]

name=Docker CE Stable - Sources

baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/$releasever/source/stable

enabled=0

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-test]

name=Docker CE Test - $basearch

baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/$releasever/$basearch/test

enabled=0

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-test-debuginfo]

name=Docker CE Test - Debuginfo $basearch

baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/$releasever/debug-$basearch/test

enabled=0

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-test-source]

name=Docker CE Test - Sources

baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/$releasever/source/test

enabled=0

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-nightly]

name=Docker CE Nightly - $basearch

baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/$releasever/$basearch/nightly

enabled=0

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-nightly-debuginfo]

name=Docker CE Nightly - Debuginfo $basearch

baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/$releasever/debug-$basearch/nightly

enabled=0

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-nightly-source]

name=Docker CE Nightly - Sources

baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/$releasever/source/nightly

enabled=0

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[root@k8s-master ~]# vim /etc/yum.repos.d/k8s.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=0

Copy all repository files to the other two hosts

[root@k8s-master ~]# for i in 11 12

> do

> scp -r /etc/yum.repos.d/*.repo root@192.168.140.$i:/etc/yum.repos.d/

> done

Build the YUM cache

[root@k8s-master ~]# yum clean all && yum makecache fast

[root@k8s-node01 ~]# yum clean all && yum makecache fast

[root@k8s-node02 ~]# yum clean all && yum makecache fast

9. Update the system to the latest packages, then reboot

[root@k8s-master ~]# yum update -y

[root@k8s-node01 ~]# yum update -y

[root@k8s-node02 ~]# yum update -y

[root@k8s-master ~]# init 6

[root@k8s-node01 ~]# init 6

[root@k8s-node02 ~]# init 6

10. Upgrade the kernel (optional)

See the CentOS 7 kernel upgrade tutorial: https://www.wsjj.top/archives/kernel

III. Tune System Parameters

1. Install the IPVS tools on all hosts

[root@k8s-master ~]# yum install ipvsadm ipset sysstat conntrack libseccomp -y

[root@k8s-node01 ~]# yum install ipvsadm ipset sysstat conntrack libseccomp -y

[root@k8s-node02 ~]# yum install ipvsadm ipset sysstat conntrack libseccomp -y

Load the IPVS kernel modules on all hosts

[root@k8s-master ~]# vim /etc/modules-load.d/ipvs.conf

ip_vs

ip_vs_lc

ip_vs_wlc

ip_vs_rr

ip_vs_wrr

ip_vs_lblc

ip_vs_lblcr

ip_vs_dh

ip_vs_sh

ip_vs_fo

ip_vs_nq

ip_vs_sed

ip_vs_ftp

# On newer kernels such as 5.x, replace nf_conntrack_ipv4 with nf_conntrack

nf_conntrack_ipv4

ip_tables

ip_set

xt_set

ipt_set

ipt_rpfilter

ipt_REJECT

ipip

[root@k8s-master ~]# scp -r /etc/modules-load.d/ipvs.conf root@192.168.140.11:/etc/modules-load.d/

ipvs.conf 100% 211 401.5KB/s 00:00

[root@k8s-master ~]# scp -r /etc/modules-load.d/ipvs.conf root@192.168.140.12:/etc/modules-load.d/

ipvs.conf 100% 211 309.1KB/s 00:00

[root@k8s-master ~]# systemctl enable --now systemd-modules-load

[root@k8s-node01 ~]# systemctl enable --now systemd-modules-load

[root@k8s-node02 ~]# systemctl enable --now systemd-modules-load
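
After the service has run, it is worth confirming that the modules were really loaded. The sketch below compares the module list with the loaded modules and prints any that are missing. `missing_modules` is a hypothetical name; it reads `/proc/modules` by default, so modules compiled directly into the kernel would be reported as missing even though they are available.

```shell
# Hypothetical helper: print every module named in the list file ($1) that
# does not appear in the loaded-module table ($2, default /proc/modules).
missing_modules() {
    conf="$1"
    loaded="${2:-/proc/modules}"
    # Drop comments and blank lines, then look up each remaining name.
    sed -e 's/#.*//' -e '/^[[:space:]]*$/d' "$conf" | while read -r mod _; do
        awk -v m="$mod" '$1 == m { found = 1 } END { exit !found }' "$loaded" \
            || echo "$mod"
    done
}

# Example use on one host:
# missing_modules /etc/modules-load.d/ipvs.conf
```

An empty result means every listed module is loaded; `lsmod | grep ip_vs` gives a quick manual view of the same information.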

2. Tune kernel parameters on all hosts

[root@k8s-master ~]# vim /etc/sysctl.d/k8s.conf

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

fs.may_detach_mounts = 1

vm.overcommit_memory=1

vm.panic_on_oom=0

fs.inotify.max_user_watches=89100

fs.file-max=52706963

fs.nr_open=52706963

net.netfilter.nf_conntrack_max=2310720

net.ipv4.tcp_keepalive_time = 600

net.ipv4.tcp_keepalive_probes = 3

net.ipv4.tcp_keepalive_intvl =15

net.ipv4.tcp_max_tw_buckets = 36000

net.ipv4.tcp_tw_reuse = 1

net.ipv4.tcp_max_orphans = 327680

net.ipv4.tcp_orphan_retries = 3

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_max_syn_backlog = 16384

net.ipv4.ip_conntrack_max = 65536

net.ipv4.tcp_timestamps = 0

net.core.somaxconn = 16384

[root@k8s-master ~]# scp -r /etc/sysctl.d/k8s.conf root@192.168.140.11:/etc/sysctl.d/

k8s.conf 100% 704 1.4MB/s 00:00

[root@k8s-master ~]# scp -r /etc/sysctl.d/k8s.conf root@192.168.140.12:/etc/sysctl.d/

k8s.conf 100% 704 1.0MB/s 00:00

[root@k8s-master ~]# sysctl --system

[root@k8s-node01 ~]# sysctl --system

[root@k8s-node02 ~]# sysctl --system
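
Long sysctl files collected from several sources tend to accumulate repeated keys, and with repeats the last value silently wins. As a small sanity check, this sketch lists keys that occur more than once. `duplicate_sysctl_keys` is a hypothetical name, and the parser assumes plain `key = value` lines.

```shell
# Hypothetical helper: print each sysctl key that is defined more than once
# in the file given as $1 (printed once per duplicated key).
duplicate_sysctl_keys() {
    awk -F= '
        /^[ \t]*(#|;|$)/ { next }                 # skip comments and blank lines
        {
            key = $1
            gsub(/[ \t]/, "", key)                # strip spaces around the key
            if (++seen[key] == 2) print key
        }
    ' "$1"
}

# Example use:
# duplicate_sysctl_keys /etc/sysctl.d/k8s.conf
```

To confirm that a value took effect, a single key can be queried directly, for example `sysctl net.ipv4.ip_forward`.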

IV. Install the Required Software on All Hosts

1. Install docker-ce 19.03 on all hosts

[root@k8s-master ~]# yum install -y docker-ce-19.03*

[root@k8s-node01 ~]# yum install -y docker-ce-19.03*

[root@k8s-node02 ~]# yum install -y docker-ce-19.03*

[root@k8s-master ~]# systemctl enable --now docker

[root@k8s-node01 ~]# systemctl enable --now docker

[root@k8s-node02 ~]# systemctl enable --now docker

Configure registry mirrors for Docker on all hosts

[root@k8s-master ~]# vim /etc/docker/daemon.json

{

"registry-mirrors": ["http://hub-mirror.c.163.com",

"https://docker.mirrors.ustc.edu.cn"]

}

[root@k8s-master ~]# scp -r /etc/docker/daemon.json root@192.168.140.11:/etc/docker/

daemon.json 100% 94 140.1KB/s 00:00

[root@k8s-master ~]# scp -r /etc/docker/daemon.json root@192.168.140.12:/etc/docker/

daemon.json 100% 94 43.8KB/s 00:00

[root@k8s-master ~]# systemctl restart docker

[root@k8s-node01 ~]# systemctl restart docker

[root@k8s-node02 ~]# systemctl restart docker

2. Install Kubernetes on all hosts

[root@k8s-master ~]# yum install -y kubeadm-1.20.7 kubelet-1.20.7 kubectl-1.20.7

[root@k8s-node01 ~]# yum install -y kubeadm-1.20.7 kubelet-1.20.7 kubectl-1.20.7

[root@k8s-node02 ~]# yum install -y kubeadm-1.20.7 kubelet-1.20.7 kubectl-1.20.7

Point the kubelet at a mirror for the pause image

[root@k8s-master ~]# vim /etc/sysconfig/kubelet

KUBELET_EXTRA_ARGS="--pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google_containers/pause-amd64:3.2"

[root@k8s-master ~]# scp -r /etc/sysconfig/kubelet root@192.168.140.11:/etc/sysconfig/

kubelet 100% 117 106.7KB/s 00:00

[root@k8s-master ~]# scp -r /etc/sysconfig/kubelet root@192.168.140.12:/etc/sysconfig/

kubelet 100% 117 139.2KB/s 00:00

[root@k8s-master ~]# systemctl enable --now kubelet

[root@k8s-node01 ~]# systemctl enable --now kubelet

[root@k8s-node02 ~]# systemctl enable --now kubelet

V. Initialize the Kubernetes Cluster

1. Prepare the initialization file on the master node

[root@k8s-master ~]# kubeadm config print init-defaults > new.yaml

[root@k8s-master ~]# vim new.yaml

# The file is shown only in part; these are the modified sections

localAPIEndpoint:

  advertiseAddress: 192.168.140.10 # address the API server advertises

  bindPort: 6443

apiServer:

  certSANs: # add this entry manually

  - 192.168.140.10 # extra address to include in the API server certificate

  timeoutForControlPlane: 4m0s

apiVersion: kubeadm.k8s.io/v1beta2

certificatesDir: /etc/kubernetes/pki

clusterName: kubernetes

controlPlaneEndpoint: 192.168.140.10:6443 # shared endpoint of the control plane; add this line manually

controllerManager: {}

imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers # pull images from a mirror in China

kind: ClusterConfiguration

kubernetesVersion: v1.20.7 # Kubernetes version to install

networking:

  dnsDomain: cluster.local

  podSubnet: 192.168.0.0/16 # pod network from the plan; add this line manually

  serviceSubnet: 172.16.0.0/16 # service network from the plan

scheduler: {}

2. Pull the images needed for initialization on the master node

[root@k8s-master ~]# kubeadm config images pull --config /root/new.yaml

3. Initialize the cluster on the master node

Note: the token and hashes generated here are different for every cluster!

[root@k8s-master ~]# kubeadm init --config /root/new.yaml --upload-certs

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf # set the environment variable

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

# Use this command to join additional master (control-plane) nodes

kubeadm join 192.168.140.10:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:9f7a17bdb794de39d777c0a0301f5979ce1faeee6df833223d455c8c45728ec0 \

--control-plane --certificate-key 4d69a847667698d461dbe235ed30b8fbd973d430f2ec8ee89f2b064a4183c714

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!

As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use

"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

# Use this command to join worker nodes to the cluster

kubeadm join 192.168.140.10:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:9f7a17bdb794de39d777c0a0301f5979ce1faeee6df833223d455c8c45728ec0

4. Set the environment variable

[root@k8s-master ~]# vim /etc/profile

# Append the following at the end of the file

export KUBECONFIG=/etc/kubernetes/admin.conf

[root@k8s-master ~]# source /etc/profile

5. Join the worker nodes to the cluster

[root@k8s-node01 ~]# kubeadm join 192.168.140.10:6443 --token abcdef.0123456789abcdef \

> --discovery-token-ca-cert-hash sha256:9f7a17bdb794de39d777c0a0301f5979ce1faeee6df833223d455c8c45728ec0

[root@k8s-node02 ~]# kubeadm join 192.168.140.10:6443 --token abcdef.0123456789abcdef \

> --discovery-token-ca-cert-hash sha256:9f7a17bdb794de39d777c0a0301f5979ce1faeee6df833223d455c8c45728ec0
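
The bootstrap token printed by kubeadm init expires (24 hours by default), so the join command shown above stops working later on. Running `kubeadm token create --print-join-command` on the master prints a fresh one. The hash part can also be recomputed from the cluster CA certificate. The sketch below follows the openssl pipeline from the kubeadm documentation; the wrapper name `ca_cert_hash` is an assumption for this example.

```shell
# Hypothetical helper: print the value for --discovery-token-ca-cert-hash,
# computed from the CA certificate given as $1.
ca_cert_hash() {
    cert="${1:-/etc/kubernetes/pki/ca.crt}"
    # Extract the public key, convert it to DER, and hash it with SHA-256.
    hash="$(openssl x509 -pubkey -in "$cert" \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex \
        | sed 's/^.* //')"
    printf 'sha256:%s\n' "$hash"
}

# Example use on the master node:
# ca_cert_hash /etc/kubernetes/pki/ca.crt
```

The printed value should match the hash in the join command that kubeadm init produced for the same cluster.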

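6. Back on the master node, check the node status

No pod network plugin has been deployed yet, so pods on different hosts cannot reach each other and every node reports NotReady. A cross-host pod network has to be deployed separately.

Either flannel or calico can provide it; this tutorial uses calico.

For a flannel deployment tutorial, see: https://www.wsjj.top/archives/133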

[root@k8s-master ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master.linux.com NotReady control-plane,master 8m20s v1.20.7

k8s-node01.linux.com NotReady <none> 92s v1.20.7

k8s-node02.linux.com NotReady <none> 84s v1.20.7

[root@k8s-master ~]# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-54d67798b7-6ll5q 0/1 Pending 0 113m

kube-system coredns-54d67798b7-sgczg 0/1 Pending 0 113m

kube-system etcd-k8s-master.linux.com 1/1 Running 0 113m

kube-system kube-apiserver-k8s-master.linux.com 1/1 Running 0 113m

kube-system kube-controller-manager-k8s-master.linux.com 1/1 Running 0 113m

kube-system kube-proxy-6pq4t 1/1 Running 0 107m

kube-system kube-proxy-gb66k 1/1 Running 0 113m

kube-system kube-proxy-zcjrc 1/1 Running 0 107m

kube-system kube-scheduler-k8s-master.linux.com 1/1 Running 0 113m

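VI. Deploy the calico Network for Pod-to-Pod Communication

calico uses the BGP protocol to route traffic between hosts. All steps in this part are run on the master node only.

1. Prepare the calico manifest

Download link: https://pan.baidu.com/s/14c8lth005DuGjcsBUCJrOA?pwd=ezn7

2. Set the etcd endpoint

Use either of the following two methods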

[root@k8s-master ~]# vim calico-etcd.yaml

# The file is shown only in part; these are the modified sections

kind: ConfigMap

apiVersion: v1

metadata:

  name: calico-config

  namespace: kube-system

data:

  # Configure this with the location of your etcd cluster.

  etcd_endpoints: "https://192.168.140.10:2379" # etcd endpoint

or

[root@k8s-master ~]# sed -i 's#etcd_endpoints: "http://<ETCD_IP>:<ETCD_PORT>"#etcd_endpoints: "https://192.168.140.10:2379"#g' calico-etcd.yaml

3. Provide the key and certificates used to connect to etcd

[root@k8s-master ~]# ETCD_CA=`cat /etc/kubernetes/pki/etcd/ca.crt | base64 | tr -d '\n'`

[root@k8s-master ~]# ETCD_CERT=`cat /etc/kubernetes/pki/etcd/server.crt | base64 | tr -d '\n'`

[root@k8s-master ~]# ETCD_KEY=`cat /etc/kubernetes/pki/etcd/server.key | base64 | tr -d '\n'`

[root@k8s-master ~]# sed -i "s@# etcd-key: null@etcd-key: ${ETCD_KEY}@g; s@# etcd-cert: null@etcd-cert: ${ETCD_CERT}@g; s@# etcd-ca: null@etcd-ca: ${ETCD_CA}@g" calico-etcd.yaml

Set the paths of the certificate and key files

[root@k8s-master ~]# sed -i 's#etcd_ca: ""#etcd_ca: "/calico-secrets/etcd-ca"#g; s#etcd_cert: ""#etcd_cert: "/calico-secrets/etcd-cert"#g; s#etcd_key: "" #etcd_key: "/calico-secrets/etcd-key" #g' calico-etcd.yaml

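4. Set the calico IP pool CIDR (the pod network)

Use either of the following two methods

[root@k8s-master ~]# vim calico-etcd.yaml

# The file is shown only in part; these are the modified sections

# no effect. This should fall within `--cluster-cidr`.

- name: CALICO_IPV4POOL_CIDR # uncomment this line and align it with the entries below it

  value: "192.168.0.0/16" # uncomment this line and align it with the entries below it

# Disable file logging so `kubectl logs` works.

or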

[root@k8s-master ~]# sed -ri -e 's| # - name: CALICO_IPV4POOL_CIDR| - name: CALICO_IPV4POOL_CIDR|' -e 's| # value: "192.168.0.0/16"| value: "192.168.0.0/16"|' calico-etcd.yaml
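
The calico pool has to match the pod network given to kubeadm, and because the two values live in different files they can drift apart unnoticed. As a hedged sketch, the helpers below extract both values and compare them. All three function names are hypothetical, and the parsing assumes that `value:` sits on the line directly after the `CALICO_IPV4POOL_CIDR` entry.

```shell
# Hypothetical helper: print podSubnet from a kubeadm config file.
pod_subnet() {
    awk '$1 == "podSubnet:" { print $2; exit }' "$1"
}

# Hypothetical helper: print the active (uncommented) calico IPv4 pool CIDR.
calico_pool_cidr() {
    awk '/^[ \t]*- name: CALICO_IPV4POOL_CIDR/ {
             getline                 # the value is on the next line
             gsub(/"/, "", $2)       # drop the surrounding quotes
             print $2
             exit
         }' "$1"
}

# Compare the two; returns 1 when they differ or either one is missing.
check_pod_cidr() {
    kubeadm_cidr="$(pod_subnet "$1")"
    calico_cidr="$(calico_pool_cidr "$2")"
    if [ -n "$kubeadm_cidr" ] && [ "$kubeadm_cidr" = "$calico_cidr" ]; then
        echo "OK: $kubeadm_cidr"
    else
        echo "MISMATCH: kubeadm='$kubeadm_cidr' calico='$calico_cidr'"
        return 1
    fi
}

# Example use on the master node:
# check_pod_cidr /root/new.yaml calico-etcd.yaml
```

An empty calico value in the output usually means the two lines are still commented out in the manifest.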

5. Deploy the calico network

[root@k8s-master ~]# kubectl create -f calico-etcd.yaml

6. Check the status

All nodes have now changed to the Ready state

[root@k8s-master ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master.linux.com Ready control-plane,master 179m v1.20.7

k8s-node01.linux.com Ready <none> 172m v1.20.7

k8s-node02.linux.com Ready <none> 172m v1.20.7

All calico pods have started

If a pod does not reach the Running state, delete it with kubectl delete pod <name> -n kube-system so that it is recreated

[root@k8s-master ~]# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-5f6d4b864b-2pqz6 1/1 Running 0 12m

kube-system calico-node-fld77 1/1 Running 0 86s

kube-system calico-node-h2kxd 1/1 Running 0 73s

kube-system calico-node-vfltp 1/1 Running 0 62s

kube-system coredns-54d67798b7-6ll5q 0/1 Pending 0 50s

kube-system coredns-54d67798b7-sgczg 0/1 Pending 0 28s

kube-system etcd-k8s-master.linux.com 1/1 Running 0 145m

kube-system kube-apiserver-k8s-master.linux.com 1/1 Running 0 145m

kube-system kube-controller-manager-k8s-master.linux.com 1/1 Running 0 145m

kube-system kube-proxy-6pq4t 1/1 Running 0 138m

kube-system kube-proxy-gb66k 1/1 Running 0 145m

kube-system kube-proxy-zcjrc 1/1 Running 0 138m

kube-system kube-scheduler-k8s-master.linux.com 1/1 Running 0 145m

Fix coredns pods that fail to start

Delete them and wait for them to be recreated automatically

[root@k8s-master ~]# kubectl delete pod coredns-54d67798b7-6ll5q -n kube-system

[root@k8s-master ~]# kubectl delete pod coredns-54d67798b7-sgczg -n kube-system

Check again: the new pods are being created

[root@k8s-master ~]# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-5f6d4b864b-2pqz6 1/1 Running 0 12m

kube-system calico-node-fld77 1/1 Running 0 78s

kube-system calico-node-h2kxd 1/1 Running 0 65s

kube-system calico-node-vfltp 0/1 Running 0 54s

kube-system coredns-54d67798b7-cbq9r 0/1 ContainerCreating 0 42s

kube-system coredns-54d67798b7-ch5hw 0/1 ContainerCreating 0 20s

kube-system etcd-k8s-master.linux.com 1/1 Running 0 145m

kube-system kube-apiserver-k8s-master.linux.com 1/1 Running 0 145m

kube-system kube-controller-manager-k8s-master.linux.com 1/1 Running 0 145m

kube-system kube-proxy-6pq4t 1/1 Running 0 138m

kube-system kube-proxy-gb66k 1/1 Running 0 144m

kube-system kube-proxy-zcjrc 1/1 Running 0 138m

kube-system kube-scheduler-k8s-master.linux.com 1/1 Running 0 145m

After a short while they reach the Running state

[root@k8s-master ~]# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-5f6d4b864b-2pqz6 1/1 Running 0 13m

kube-system calico-node-fld77 1/1 Running 0 2m13s

kube-system calico-node-h2kxd 1/1 Running 0 2m

kube-system calico-node-vfltp 1/1 Running 0 109s

kube-system coredns-54d67798b7-cbq9r 1/1 Running 0 97s

kube-system coredns-54d67798b7-ch5hw 1/1 Running 0 75s

kube-system etcd-k8s-master.linux.com 1/1 Running 0 146m

kube-system kube-apiserver-k8s-master.linux.com 1/1 Running 0 146m

kube-system kube-controller-manager-k8s-master.linux.com 1/1 Running 0 146m

kube-system kube-proxy-6pq4t 1/1 Running 0 139m

kube-system kube-proxy-gb66k 1/1 Running 0 145m

kube-system kube-proxy-zcjrc 1/1 Running 0 139m

kube-system kube-scheduler-k8s-master.linux.com 1/1 Running 0 146m
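
Scanning these listings by eye gets tedious as the cluster grows. A small filter over the `kubectl get pods -A` output can pick out the pods that still need attention. This is only a sketch: `not_ready_pods` is a hypothetical name, it relies on the default column layout shown above, and it would also flag pods that have finished normally (status Completed).

```shell
# Hypothetical helper: read `kubectl get pods -A` output on stdin and print
# namespace/name for every pod that is not Running with all containers ready.
not_ready_pods() {
    awk 'NR > 1 {
             split($3, ready, "/")    # READY column, e.g. "0/1"
             if ($4 != "Running" || ready[1] != ready[2])
                 print $1 "/" $2
         }'
}

# Example use on the master node:
# kubectl get pods -A | not_ready_pods
```

No output means every listed pod is Running and fully ready.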

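VII. Install the Metrics Add-on (Optional)

It collects and monitors the CPU and memory usage of the nodes.

1. Prepare the metrics manifest

Download link: https://pan.baidu.com/s/14c8lth005DuGjcsBUCJrOA?pwd=ezn7

2. Copy the front-proxy-ca.crt certificate to all worker nodes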

[root@k8s-master ~]# scp /etc/kubernetes/pki/front-proxy-ca.crt root@192.168.140.11:/etc/kubernetes/pki/front-proxy-ca.crt

front-proxy-ca.crt

[root@k8s-master ~]# scp /etc/kubernetes/pki/front-proxy-ca.crt root@192.168.140.12:/etc/kubernetes/pki/front-proxy-ca.crt

front-proxy-ca.crt

3. Deploy the metrics add-on

[root@k8s-master ~]# kubectl create -f comp.yaml

4. Check the metrics-server pod status

[root@k8s-master ~]# kubectl get pods -A

kube-system metrics-server-545b8b99c6-r68jk 1/1 Running 0 2m41s

5. Test

[root@k8s-master ~]# kubectl top nodes

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

k8s-node01.linux.com 89m 2% 1197Mi 43%

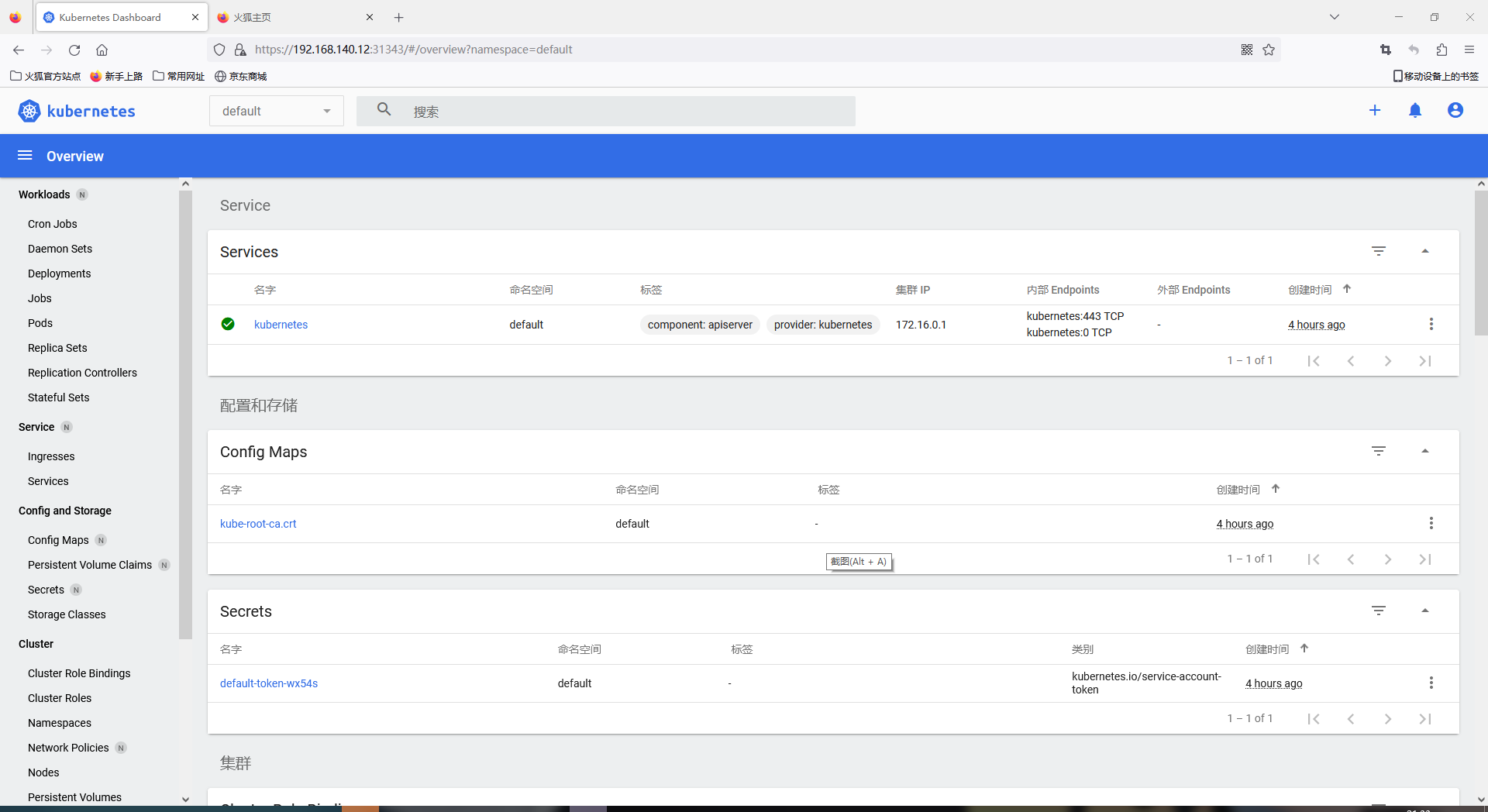

VIII. Deploy the Dashboard Add-on (Optional)

It provides a web UI for the cluster.

1. Prepare the files

Download link: https://pan.baidu.com/s/14c8lth005DuGjcsBUCJrOA?pwd=ezn7

2. Deploy the dashboard

[root@k8s-master ~]# cd dashboard/

[root@k8s-master dashboard]# kubectl create -f ./

3. Change the dashboard Service type to NodePort (the default ClusterIP type cannot be reached from other machines)

[root@k8s-master dashboard]# kubectl edit svc kubernetes-dashboard -n kubernetes-dashboard

# The file is shown only in part; these are the modified sections

ports:

- port: 443

  protocol: TCP

  targetPort: 8443

selector:

  k8s-app: kubernetes-dashboard

sessionAffinity: None

type: NodePort # change to NodePort

[root@k8s-master dashboard]# kubectl get service -n kubernetes-dashboard

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

dashboard-metrics-scraper ClusterIP 172.16.90.167 <none> 8000/TCP 2m34s

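kubernetes-dashboard NodePort 172.16.180.168 <none> 443:31343/TCP 2m34s

The TYPE column now shows NodePort. Note the mapped port after the colon (31343 here); it is needed to reach the dashboard.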

Check which host the pods run on

They run on node02, whose IP here is 192.168.140.12

[root@k8s-master dashboard]# kubectl get pods -n kubernetes-dashboard -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

dashboard-metrics-scraper-7645f69d8c-dtsqp 1/1 Running 0 8m37s 192.168.242.130 k8s-node02.linux.com <none> <none>

kubernetes-dashboard-78cb679857-z96n4 1/1 Running 0 8m37s 192.168.242.131 k8s-node02.linux.com <none> <none>

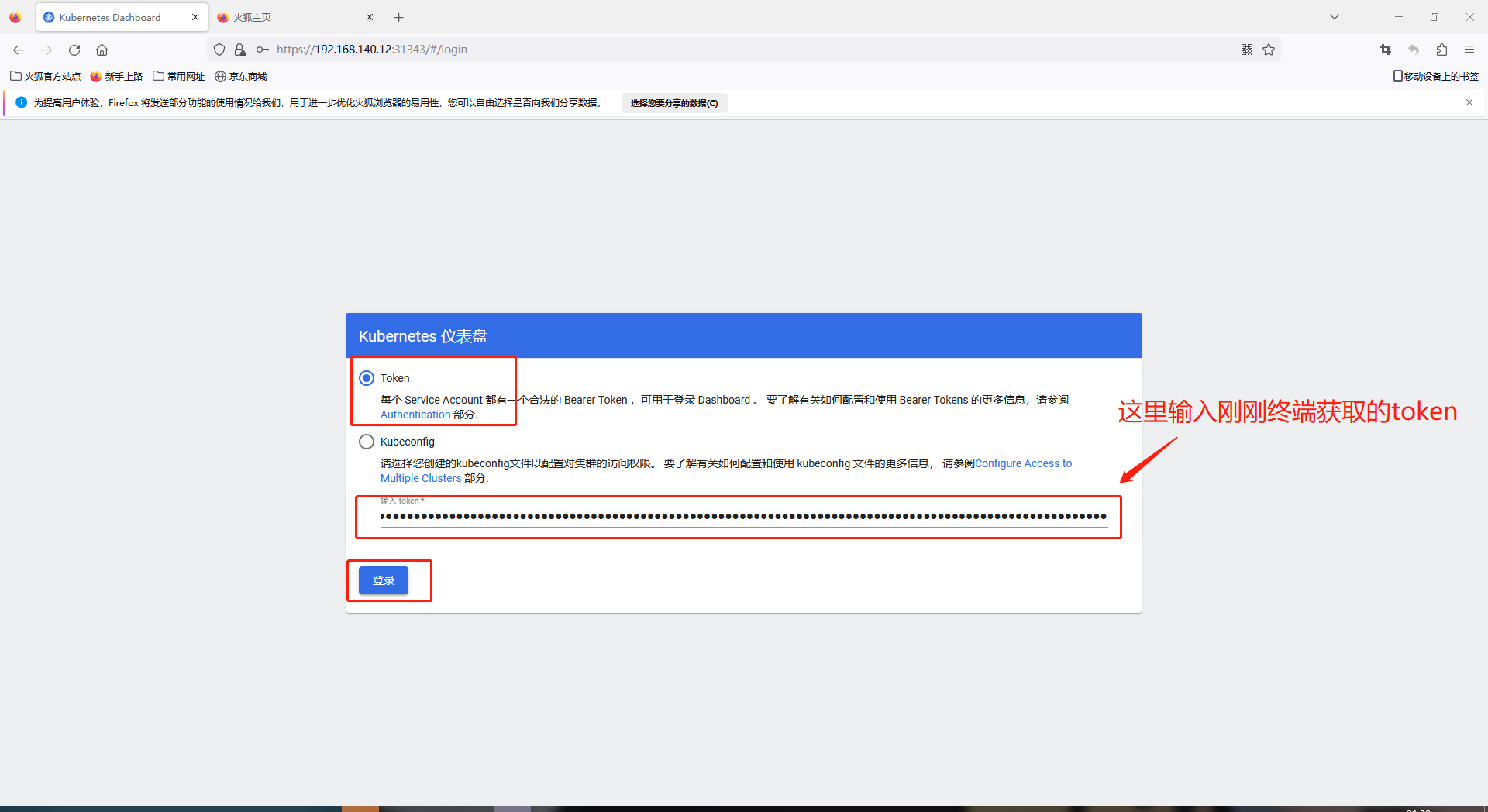

4. Access the dashboard

Get the login token

[root@k8s-master dashboard]# kubectl -n kube-system describe secret $(kubectl -n kube-system get secret | grep admin-user | awk '{print $1}')

Name: admin-user-token-hdhkt

Namespace: kube-system

Labels: <none>

Annotations: kubernetes.io/service-account.name: admin-user

kubernetes.io/service-account.uid: b1b41cb8-0d42-4dfc-826e-ffe9eebac3e6

Type: kubernetes.io/service-account-token

Data

====

namespace: 11 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IklVSVl5QVkyRm9EeFNxUHdDY0gzT2phYkxUUklHRlhUcUk3S1JHSDRubEEifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLWhkaGt0Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiJiMWI0MWNiOC0wZDQyLTRkZmMtODI2ZS1mZmU5ZWViYWMzZTYiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZS1zeXN0ZW06YWRtaW4tdXNlciJ9.jJxwXb73WLTpJHu0CCJLFkp8AYoCn_zuJNWvtYFPvkZirkoGIB8o5AOlmSz9zp5hJDdD_weEksC8NK9CIwDP0botkqcDBGwkVM2GD7xdOZ24K1t4CTkhatNoaRT5cIdeSLgD4_c1ky0BOQS-Et9PYknQHAVI81bNCUpti15tkkPqRdI2-7WnB1SS0cYUm2C_TXcWY_YjRjDSpKp9ZsycbsDLCfGU2C1YWTURbT_m70kTYG2pioSlyvqV1SX9dXKK_jvq50_YkvhiLSuRnPmVaIwlgjE_OxgC8tyC55Us5f0OT5nOamLOdsngG33hYZ6DNXq7atd39W2VU0SyG7EqHA

# Keep the token above. It is the credential used to log in, and it is different for every cluster.

ca.crt: 1066 bytes

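Open the dashboard in a browser

Use the IP of node02 plus port 31343. The port is different for every cluster, so use the port from your own output above. A NodePort Service is normally reachable on the IP of any node, so another node's IP should work as well.

https://192.168.140.12:31343/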